POINT SPACE

A WARF-funded project to take research on simulating physical environments out into the real world

Approach

In computer graphics, we make trade-offs between interactivity and realism. We know how to produce realistic computer graphics, but they often take a long time to compute. Conversely, we know how to make graphics interactive, but interactive graphics cannot approach the visual complexity of real-world environments. What we want is the best of both worlds.

Real-world environments are particularly challenging because the clutter and chaos of real-world spaces do not map well to mathematical models.

Capture

Capturing the Real World

People often turn to capture technologies, such as LiDAR scanning, to digitize real-world environments. The resulting datasets are a series of points in 3D space, referred to as a point cloud. These highly realistic point clouds often need to be converted into lower-detail triangulated meshes for interactive visualization.

Advantages of Point Clouds

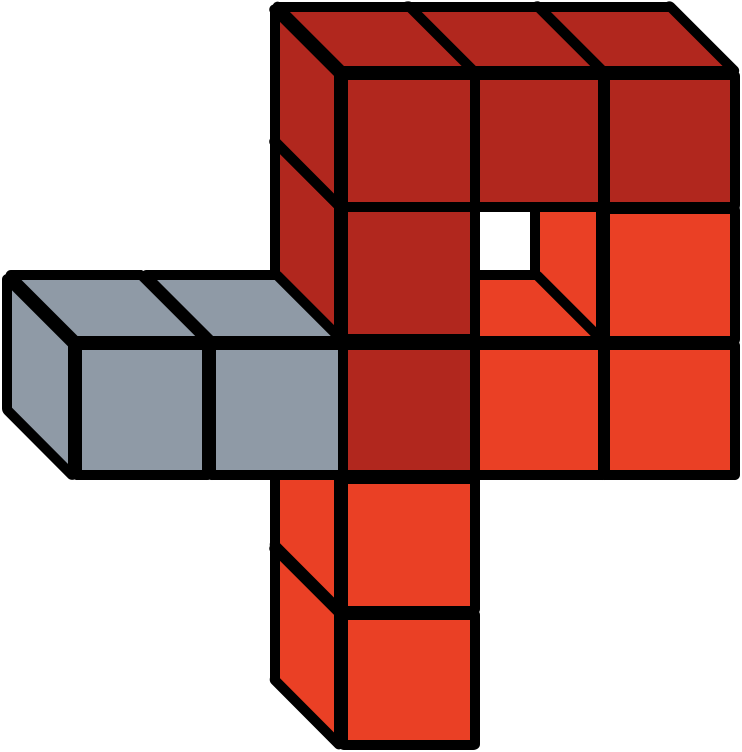

Compared with the original point cloud file (right), the mesh rendering of the same environment (left) loses many details.

Challenges of Point Clouds

Because points have no volume, controlling how they are sampled during rendering can be difficult. The figure above shows four different sampling techniques used in a standard point cloud rendering system. In each case, the surfaces appear either blocky or see-through.

What is unique about our approach?

We have built a way to store and render data using volumetric cubes.

Our method captures and stores data using volumes rather than points. This enables data to be stored and visualized at the level of detail at which it was captured.

Our novel rendering methods use the power of the graphics processor to draw many volumes in parallel at very high speed.
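To illustrate the general idea of storing volumes instead of raw points, here is a minimal sketch of generic voxel-grid quantization: each captured point is assigned to an axis-aligned cube of edge length `voxel_size`, and each occupied cube keeps the mean color of the points inside it. This is a common textbook technique, not the Point Space method itself (which is not described here), and the function names `voxelize` and `cube_center` and the `(x, y, z, r, g, b)` point layout are hypothetical. Choosing `voxel_size` close to the scanner's sampling spacing is likewise an assumption about how one might preserve capture detail.

```python
from collections import defaultdict
from math import floor


def voxelize(points, voxel_size):
    """Group (x, y, z, r, g, b) points into cubes of edge length voxel_size.

    Hypothetical helper: returns a dict mapping integer cube indices
    (i, j, k) to the mean (r, g, b) color of the points in that cube.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    sums = defaultdict(lambda: [0.0, 0.0, 0.0, 0])  # running r, g, b sums and point count
    for x, y, z, r, g, b in points:
        # floor() (not int()) keeps negative coordinates in the correct cube
        key = (floor(x / voxel_size), floor(y / voxel_size), floor(z / voxel_size))
        acc = sums[key]
        acc[0] += r
        acc[1] += g
        acc[2] += b
        acc[3] += 1
    # Average the accumulated colors of each occupied cube
    return {
        key: (acc[0] / acc[3], acc[1] / acc[3], acc[2] / acc[3])
        for key, acc in sums.items()
    }


def cube_center(key, voxel_size):
    """World-space center of the cube with integer index key (hypothetical helper)."""
    return tuple((c + 0.5) * voxel_size for c in key)


points = [
    (0.1, 0.2, 0.3, 255, 0, 0),
    (0.4, 0.1, 0.2, 0, 0, 255),
    (1.5, 0.0, 0.0, 0, 255, 0),
]
cubes = voxelize(points, 1.0)
# cubes == {(0, 0, 0): (127.5, 0.0, 127.5), (1, 0, 0): (0.0, 255.0, 0.0)}
```

The two nearby points collapse into one cube with a blended color, while the distant point gets its own cube. Storing only occupied cubes in a dictionary keeps memory proportional to the captured surface rather than to the full bounding volume; a renderer could then draw each cube as one instance at `cube_center(key, voxel_size)`.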

Standard Approach

Our Approach

Integration

Built for the Unity Game Engine

Unity is a premier platform for creating games and interactive, immersive experiences. Our methods plug directly into Unity's rendering algorithms, allowing them to be deployed on any of Unity's many supported platforms.

Examples

Comparison video of a standard mesh rendering and our techniques.

Visualizing data captured with laser scanning. More information on the capture methodologies can be found at http://pages.discovery.wisc.edu/vizhome/

Visualizing data captured from cameras as part of a project with the National Park Service to capture underwater shipwrecks.

Proof-of-concept captures using the new LiDAR scanners on recently released iPhone and iPad models.

Traction

The Pointspace project has received over $3.5 million in funding so far from the following organizations:

The project has also been granted two patents, with a third patent pending.

Team

Kevin Ponto

Associate Professor

Expert in Virtual Reality

Ross Tredinnick

Staff Programmer

Background in the Game Industry

Connect

We are seeking connections in:

The Gaming Industry

- Software Developers

- Design Studios

- Graphics Card Manufacturers

Construction and Real Estate

- Building Information Modeling

- Architecture and Design Firms

- Property Management